Microsoft has announced a new deepfake detection tool, Microsoft Video Authenticator, to help spot AI-generated synthetic images and videos on the internet.

Deepfake technology is widely used to swap people's faces in videos so that they appear to say things they never said, and scammers often exploit it. However, the technology also has positive uses: deep generative models open up new possibilities in healthcare, where concerns about protecting patient privacy are growing.

Using deepfake technology, a hospital with enough computational power could generate an entirely synthetic population of virtual patients, removing the need to share real patients' data.

How Microsoft Video Authenticator detects deepfakes

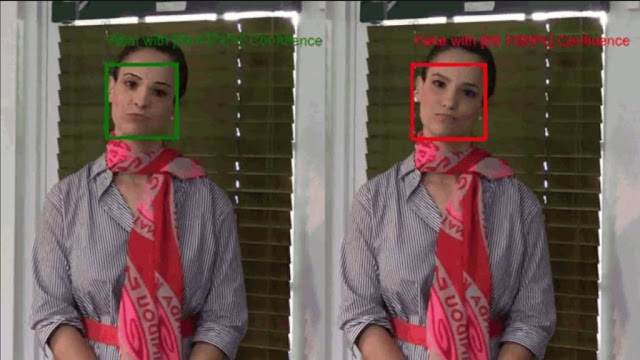

Microsoft Video Authenticator was developed by Microsoft Research in collaboration with the Responsible AI team and the AETHER committee. It analyzes an image or video and produces what the company calls a "confidence score": a percentage indicating the likelihood that the media has been manipulated.

The tool works by detecting the blending boundary of the deepfake, along with subtle fading or greyscale elements that may not be detectable by the human eye.

Alongside the tool, Microsoft announced a technology built into the Microsoft Azure cloud service that lets content publishers add digital hashes and certificates to their content. A reader, such as a browser extension, then checks those certificates and hashes to verify that the content is authentic and unaltered.
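To make the hash-and-certificate idea more concrete, here is a minimal Python sketch of the general pattern: a publisher records a digest of the content plus a signature over that digest, and a reader checks the signature and recomputes the digest to confirm nothing changed. This is not Microsoft's implementation. The function names `publish` and `verify`, the manifest format, and `PUBLISHER_KEY` are hypothetical, and an HMAC with a shared key stands in for the public-key certificates a real provenance system would use.

```python
import hashlib
import hmac
import json

# HYPOTHETICAL shared key standing in for a publisher's signing credential.
# Real provenance systems use public-key certificates, not a shared secret.
PUBLISHER_KEY = b"hypothetical-publisher-key"


def publish(content: bytes, key: bytes = PUBLISHER_KEY) -> dict:
    """Publisher side: hash the content and sign the digest into a manifest."""
    digest = hashlib.sha256(content).hexdigest()
    signature = hmac.new(key, digest.encode(), hashlib.sha256).hexdigest()
    return {"sha256": digest, "signature": signature}


def verify(content: bytes, manifest: dict, key: bytes = PUBLISHER_KEY) -> bool:
    """Reader side: check the signature on the digest, then re-hash the content."""
    expected_sig = hmac.new(key, manifest["sha256"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected_sig, manifest["signature"]):
        return False  # manifest was not produced by the holder of the key
    actual = hashlib.sha256(content).hexdigest()
    # True only if the content bytes are identical to what was published
    return hmac.compare_digest(actual, manifest["sha256"])


if __name__ == "__main__":
    video = b"example video bytes"
    manifest = publish(video)
    print(json.dumps(manifest, indent=2))
    print(verify(video, manifest))             # True: content untouched
    print(verify(video + b"edit", manifest))   # False: content was altered
```

Any change to the bytes, even one appended byte, yields a different SHA-256 digest, so `verify` returns `False`. Using `hmac.compare_digest` for the comparisons avoids leaking timing information.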

Other complementary deepfake detection efforts

In late 2019, Google, in collaboration with Jigsaw, released a large dataset of visual deepfakes. The dataset has been incorporated into the new FaceForensics benchmark from the Technical University of Munich and the University Federico II of Naples, with the aim of directly supporting deepfake detection efforts.

Moreover, as deepfake technology grows more advanced and harder to detect, even for experts, it becomes important to pit AI against AI to help spot deepfakes.
